Generative Music

2017 - present

An experiment in creating a generative music system (basically, teaching my computer how to compose ambient music), inspired by some of Brian Eno’s work, especially the album Reflection and the app Bloom, which he made in collaboration with Peter Chilvers.

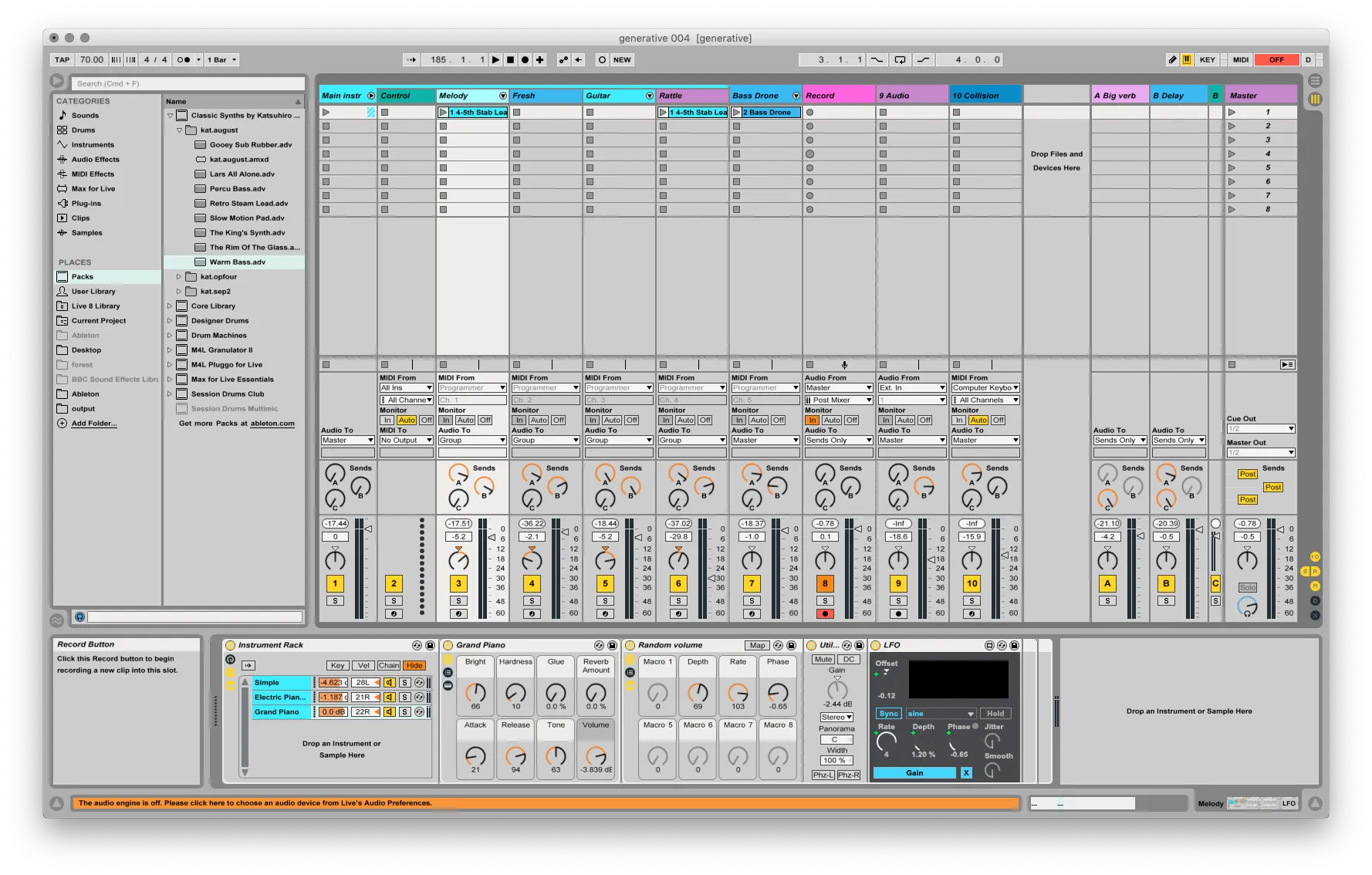

In short, I’ve built an application that pretends to be a MIDI keyboard, sending notes to my DAW (Digital Audio Workstation) of choice, Ableton Live, which then turns them into sound using virtual instruments.

How it started

I’ve tried building something like this many times over the last ten years or so, but I never managed to get anything working until I started this project in 2017. I’ve been working on it on and off since then.

The initial version was built entirely in the browser with JavaScript and Tone.js (you can listen to the actual, infinite version, or to a recording on SoundCloud).

In the end, though, this didn’t give me enough “sonic” control over the output: effects such as reverbs and delays built with the browser’s audio APIs often have limits that you don’t run into in DAWs like Ableton Live, Pro Tools, and Logic.

The next iteration used MIDI to send notes and other events to my DAW (Ableton Live). Because my browser of choice (Firefox) didn’t support the Web MIDI API yet, I chose to run the app in Node.js instead of the browser. In the months that followed, I expanded the code a lot.
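Under the hood, “sending notes over MIDI” comes down to a few three-byte channel messages defined by the MIDI 1.0 specification. The sketch below builds note-on, note-off, and control change messages as raw byte arrays. The function names are hypothetical, and it deliberately leaves out how the bytes reach Ableton (in a Node.js app that would typically be a MIDI library writing to a virtual or loopback MIDI port, which is not shown here).

```typescript
// Raw MIDI 1.0 channel voice messages as byte arrays.
// Function names are hypothetical; channel is 0-15 on the wire (shown as 1-16 in most DAWs).

/** Note on: status byte 0x9n, then note number and velocity (each 0-127). */
function noteOn(channel: number, note: number, velocity: number): number[] {
  return [0x90 | (channel & 0x0f), note & 0x7f, velocity & 0x7f];
}

/** Note off: status byte 0x8n, then note number and release velocity (0 here). */
function noteOff(channel: number, note: number): number[] {
  return [0x80 | (channel & 0x0f), note & 0x7f, 0];
}

/** Control change: status byte 0xBn, then controller number and value (each 0-127). */
function controlChange(channel: number, controller: number, value: number): number[] {
  return [0xb0 | (channel & 0x0f), controller & 0x7f, value & 0x7f];
}

// Middle C (note 60) at velocity 100 on the first channel: bytes 144, 60, 100.
console.log(noteOn(0, 60, 100));
```

Routing each instrument in the app to its own MIDI channel is one simple way to let Ableton put each of them on a separate track.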

How it works

As with other forms of creative coding, you can’t just generate random garbage, so the first step is to come up with the rules and constraints of the system, play with those, and then refine them. Here, those rules come from music theory.

In the initial versions, I hardcoded the MIDI notes that the application was allowed to use across different octaves, but I soon handed this off to music theory libraries like Tonal, which make it easy to get the notes and chords in a key and convert them to the MIDI note numbers I send to Ableton.
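In the app, Tonal handles this lookup, but the underlying idea is small enough to sketch by hand. The block below is a self-contained stand-in (not Tonal’s API) that lists the MIDI note numbers of a major or natural-minor key across a range of octaves, using the common convention where C4 = 60. The scale table and the function names `noteToMidi` and `notesInKey` are illustrative assumptions.

```typescript
// Hand-rolled stand-in for the key lookup a library like Tonal provides (not Tonal's API).
// Semitone offsets of each scale degree from the tonic.
const SCALES: Record<string, number[]> = {
  major: [0, 2, 4, 5, 7, 9, 11],
  minor: [0, 2, 3, 5, 7, 8, 10], // natural minor
};

// Pitch classes of the natural notes, with C = 0.
const PITCH_CLASSES: Record<string, number> = { C: 0, D: 2, E: 4, F: 5, G: 7, A: 9, B: 11 };

/** MIDI note number for a name like "C", "F#" or "Bb" in a given octave, with C4 = 60. */
function noteToMidi(name: string, octave: number): number {
  const base = PITCH_CLASSES[name.charAt(0).toUpperCase()];
  if (base === undefined) throw new Error(`Unknown note name: ${name}`);
  let pitchClass = base;
  for (let i = 1; i < name.length; i++) {
    const accidental = name.charAt(i);
    if (accidental === "#") pitchClass += 1;
    else if (accidental === "b") pitchClass -= 1;
    else throw new Error(`Unexpected accidental in ${name}`);
  }
  return 12 * (octave + 1) + pitchClass;
}

/** All MIDI notes of a key, one full scale per octave from fromOctave to toOctave. */
function notesInKey(tonic: string, scaleName: string, fromOctave: number, toOctave: number): number[] {
  const intervals = SCALES[scaleName];
  if (!intervals) throw new Error(`Unknown scale: ${scaleName}`);
  const notes: number[] = [];
  for (let octave = fromOctave; octave <= toOctave; octave++) {
    const tonicMidi = noteToMidi(tonic, octave);
    for (const interval of intervals) notes.push(tonicMidi + interval);
  }
  return notes;
}

// C major from middle C: 60, 62, 64, 65, 67, 69, 71.
console.log(notesInKey("C", "major", 4, 4));
```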

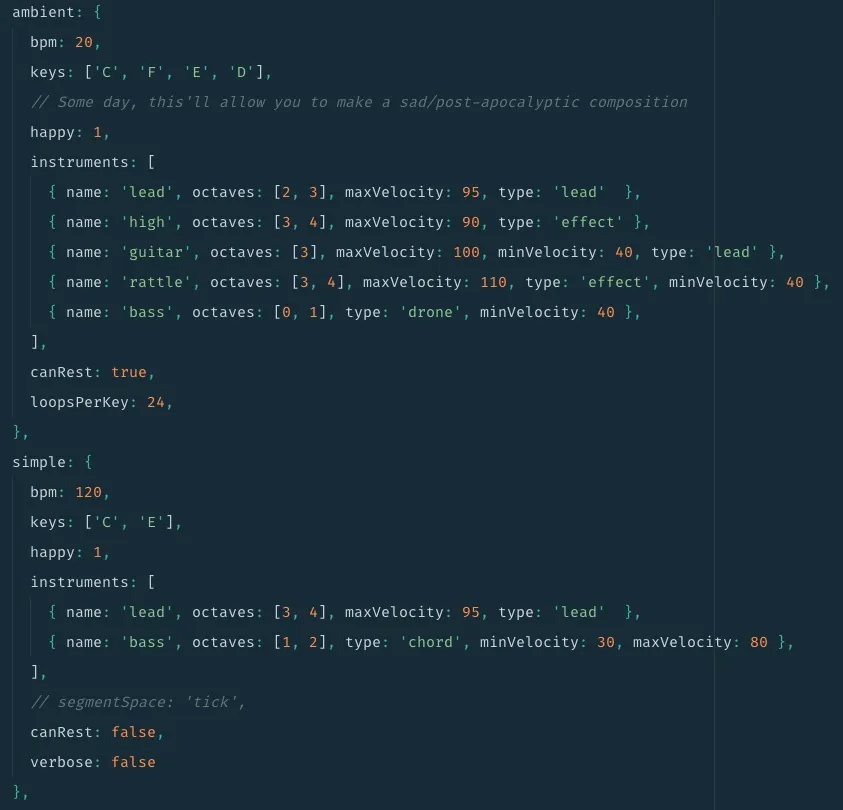

I’ve set up the system so that I can configure different instruments with different behaviours:

Segments

The notes are generated in segments: when one segment has finished, the system generates a new one. Segments can be 4, 8, or 16 ‘beats’ long.

Each segment is assigned a chord from the key that the current composition is in, and every instrument in the segment limits its notes to that chord.

The chords change from segment to segment, following a chord progression that’s decided at the start of the composition.
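The segment logic described above can be sketched roughly as follows. This is an illustration under assumptions, not the app’s code: the progression is stored as a list of 0-based scale degrees and picked once at the start; each segment takes the next degree (looping around), builds a diatonic triad on it by stacking every other scale note, and gets a random length of 4, 8, or 16 beats. `PROGRESSIONS`, `triad`, and `makeSegment` are hypothetical names, and the three progressions are just common examples.

```typescript
// Hypothetical segment model; names, progressions and lengths are illustrative.
type Rng = () => number; // returns a number in [0, 1), like Math.random

const MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]; // semitone offsets from the tonic
const SEGMENT_LENGTHS = [4, 8, 16];         // in beats

// Progressions as 0-based scale degrees.
const PROGRESSIONS: number[][] = [
  [0, 4, 5, 3], // I-V-vi-IV
  [0, 5, 3, 4], // I-vi-IV-V
  [5, 3, 0, 4], // vi-IV-I-V
];

interface Segment {
  chord: number[];     // MIDI notes the instruments are limited to
  lengthBeats: number;
}

/** Picks the progression once, at the start of a composition. */
function pickProgression(rng: Rng = Math.random): number[] {
  return PROGRESSIONS[Math.floor(rng() * PROGRESSIONS.length)];
}

/** Diatonic triad on a scale degree: every other scale note (root, third, fifth). */
function triad(tonicMidi: number, degree: number, scale: number[] = MAJOR_SCALE): number[] {
  return [0, 2, 4].map((offset) => {
    const index = degree + offset;
    return tonicMidi + 12 * Math.floor(index / scale.length) + scale[index % scale.length];
  });
}

/** Builds segment number `index`: the chord follows the progression (looping), the length is random. */
function makeSegment(index: number, progression: number[], tonicMidi: number, rng: Rng = Math.random): Segment {
  const degree = progression[index % progression.length];
  const lengthBeats = SEGMENT_LENGTHS[Math.floor(rng() * SEGMENT_LENGTHS.length)];
  return { chord: triad(tonicMidi, degree), lengthBeats };
}

// Print the first eight segments of a composition in C major (tonic C4 = 60).
const progression = pickProgression();
for (let i = 0; i < 8; i++) {
  const segment = makeSegment(i, progression, 60);
  console.log(`segment ${i}: chord ${segment.chord.join(" ")}, ${segment.lengthBeats} beats`);
}
```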

Note generators

The note generator’s job is to decide which notes will be played during the segment. This depends on the type of instrument, but the lead instrument is the most interesting one, because it plays the main melody.

There are currently three different note generators, and they are picked at random:

- Random: This isn’t truly random. It picks each note by taking the last played note and adding or subtracting a commonly used number of “steps”, which produces a string of notes within the key without any radical jumps. I did program it so that the melody is more likely to go upwards.

- Arpeggio: Picks one of several common arpeggio patterns and plays it over the current segment’s chord.

- Manual: Picks notes based on a manually entered melody (fixed to the current key and based on the previous note).
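Two of these generators lend themselves to a short sketch. The “Random” generator below is modelled as a bounded random walk over scale degrees: each step moves by a small number of scale steps, with a bias towards moving up. The “Arpeggio” generator applies one of a few patterns to the segment’s chord. The step sizes, the 0.6 upward probability, and the patterns are placeholder assumptions, and passing in `rng` (defaulting to `Math.random`) only serves to make the output reproducible for testing.

```typescript
// Sketches of two note generators; all constants are illustrative assumptions.
type Rng = () => number; // returns a number in [0, 1), like Math.random

const STEP_SIZES = [1, 2, 3]; // moves of a second, third or fourth within the scale
const UP_PROBABILITY = 0.6;   // above 0.5, so the melody tends to drift upwards
const MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11];

/** "Random" generator: a bounded random walk over scale-degree indices. */
function randomWalk(start: number, count: number, maxIndex: number, rng: Rng = Math.random): number[] {
  const indices: number[] = [];
  let current = start;
  for (let i = 0; i < count; i++) {
    const direction = rng() < UP_PROBABILITY ? 1 : -1;
    const step = STEP_SIZES[Math.floor(rng() * STEP_SIZES.length)];
    // Clamp so the melody stays inside the allowed range of notes.
    current = Math.min(maxIndex, Math.max(0, current + direction * step));
    indices.push(current);
  }
  return indices;
}

/** Converts a scale-degree index (0 = tonic, 7 = tonic an octave up) to a MIDI note. */
function degreeToMidi(index: number, tonicMidi: number, scale: number[] = MAJOR_SCALE): number {
  return tonicMidi + 12 * Math.floor(index / scale.length) + scale[index % scale.length];
}

// Arpeggio patterns as indices into the chord; indices past the top wrap into the next octave.
const ARPEGGIO_PATTERNS: number[][] = [
  [0, 1, 2, 3], // up
  [3, 2, 1, 0], // down
  [0, 2, 1, 2], // broken
];

/** "Arpeggio" generator: applies a pattern to the segment's chord. */
function arpeggiate(chord: number[], pattern: number[]): number[] {
  return pattern.map((i) => chord[i % chord.length] + 12 * Math.floor(i / chord.length));
}

function pickArpeggio(chord: number[], rng: Rng = Math.random): number[] {
  const pattern = ARPEGGIO_PATTERNS[Math.floor(rng() * ARPEGGIO_PATTERNS.length)];
  return arpeggiate(chord, pattern);
}

// Eight melody notes over two octaves of C major (indices 0-13), plus an arpeggio on C major.
const melody = randomWalk(0, 8, 13).map((i) => degreeToMidi(i, 60));
console.log(melody, pickArpeggio([60, 64, 67]));
```

Working in scale degrees rather than semitones is what keeps every generated note inside the key; the conversion to MIDI happens only at the end.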

Mutations

After the notes have been generated, they go through a mutation step, which can randomly apply several different types of mutation to each note. There are a lot of them, but here are a few interesting ones:

- Randomising the velocity: This mutation always happens, and changes the velocity of the note (i.e. how hard, and therefore how loud, it is played).

- Duplicating the note with another instrument

- Duplicating the note in another octave

- Deactivating a note

- Changing the length of the note (to add some dynamics to the melody)

- Adding a MIDI control pulse to the note.
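One way to structure this mutation step is as a table of optional mutations, each with its own probability, applied after the velocity change that always happens. The sketch below assumes that shape; the probabilities, the ±20 velocity range, and the `"pad"` target instrument are made up for illustration. Each mutation returns a list of notes, so duplications simply return more than one.

```typescript
// Sketch of a probabilistic mutation step; probabilities, ranges and "pad" are hypothetical.
type Rng = () => number; // returns a number in [0, 1), like Math.random

interface GeneratedNote {
  pitch: number;         // MIDI note number
  velocity: number;      // 1-127
  durationBeats: number;
  instrument: string;
  active: boolean;       // inactive notes would be skipped when sending
}

interface NoteMutation {
  probability: number;
  // Returns the resulting notes, so duplications can return more than one.
  apply: (note: GeneratedNote, rng: Rng) => GeneratedNote[];
}

const clampVelocity = (v: number): number => Math.min(127, Math.max(1, Math.round(v)));

const MUTATIONS: NoteMutation[] = [
  // Duplicate the note on another instrument.
  { probability: 0.1, apply: (n) => [n, { ...n, instrument: "pad" }] },
  // Duplicate the note an octave higher (if it stays in the MIDI range).
  { probability: 0.15, apply: (n) => (n.pitch + 12 <= 127 ? [n, { ...n, pitch: n.pitch + 12 }] : [n]) },
  // Deactivate the note.
  { probability: 0.05, apply: (n) => [{ ...n, active: false }] },
  // Halve or double the note length.
  { probability: 0.2, apply: (n, rng) => [{ ...n, durationBeats: n.durationBeats * (rng() < 0.5 ? 0.5 : 2) }] },
];

function mutate(note: GeneratedNote, rng: Rng = Math.random): GeneratedNote[] {
  // The velocity mutation always happens: shift by up to ±20, clamped to the MIDI range.
  let notes: GeneratedNote[] = [{ ...note, velocity: clampVelocity(note.velocity + (rng() * 2 - 1) * 20) }];
  for (const mutation of MUTATIONS) {
    if (rng() >= mutation.probability) continue;
    const next: GeneratedNote[] = [];
    for (const n of notes) next.push(...mutation.apply(n, rng));
    notes = next;
  }
  return notes;
}

const lead: GeneratedNote = { pitch: 64, velocity: 90, durationBeats: 1, instrument: "lead", active: true };
console.log(mutate(lead));
```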

MIDI control pulse

One of the mutations can trigger a sequence of MIDI control change (CC) events at the same time as the note. This is equivalent to moving a slider or knob on a MIDI keyboard or controller, and you can map these events to all kinds of parameters in your DAW.

In this case, I use it to temporarily send an instrument’s sound to a delay plugin (with the volume of the send slowly decaying over the course of a few beats). In practical terms, this means that some notes will repeat and linger in the background for a while.
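The decaying pulse can be generated as a series of control change values spread over a few beats. This sketch assumes a linear decay sampled four times per beat. In practice the curve, the step rate, and the controller number mapped to the delay send in Ableton are all free choices; `controlPulse`, `pulseToMessages`, and CC 20 are hypothetical.

```typescript
// Sketch of a decaying control change "pulse"; the linear curve, rate and CC number are assumptions.
interface CcEvent {
  beatOffset: number; // beats after the note starts
  value: number;      // 0-127
}

/** Values falling linearly from startValue to 0 over durationBeats, sampled stepsPerBeat times per beat. */
function controlPulse(startValue: number, durationBeats: number, stepsPerBeat = 4): CcEvent[] {
  const start = Math.min(127, Math.max(0, startValue));
  const totalSteps = Math.max(1, Math.round(durationBeats * stepsPerBeat));
  const events: CcEvent[] = [];
  for (let i = 0; i <= totalSteps; i++) {
    events.push({ beatOffset: i / stepsPerBeat, value: Math.round(start * (1 - i / totalSteps)) });
  }
  return events;
}

/** Turns the pulse into raw control change messages (status byte 0xBn) for one channel and controller. */
function pulseToMessages(events: CcEvent[], channel: number, controller: number): { beatOffset: number; bytes: number[] }[] {
  return events.map((e) => ({
    beatOffset: e.beatOffset,
    bytes: [0xb0 | (channel & 0x0f), controller & 0x7f, e.value],
  }));
}

// A full-level send on hypothetical CC 20, fading out over three beats.
console.log(pulseToMessages(controlPulse(127, 3), 0, 20));
```

Because the pulse starts at the same time as the note, only that note (and whatever else happens to be sounding) ends up in the delay, which is what makes the echoes feel occasional rather than constant.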

The “composition”

I could probably tweak the note generation code forever, but the same is true of the audio side. Ableton Live receives the MIDI notes generated by my app, and I can assign a different virtual instrument to each instrument used in my code. Then comes the process of finding the right instruments and effects.

I also map certain parameters (such as volume, or the cutoff of low-pass filters in equalisers) to low-frequency oscillators (LFOs), so the soundscape keeps changing slowly over time. Some of these LFOs are very slow (a 13-second cycle, for example).
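An LFO is just a slow periodic function mapped onto a parameter’s range. Ableton computes this internally, so the following is only an illustration of the idea: a sine LFO with a 13-second period, assuming the “13 seconds” refers to the length of one full cycle. The `sineLfo` name and the output ranges are arbitrary.

```typescript
// Illustration of a sine LFO; Ableton does this internally, and the ranges here are arbitrary.
/** Value of a sine LFO at time t (seconds), mapped onto [min, max]. */
function sineLfo(tSeconds: number, periodSeconds: number, min: number, max: number): number {
  const phase = (2 * Math.PI * tSeconds) / periodSeconds;
  return min + (max - min) * (0.5 + 0.5 * Math.sin(phase));
}

// A 13-second LFO sampled every quarter cycle: midpoint, top, midpoint, bottom, midpoint.
for (let t = 0; t <= 13; t += 3.25) {
  console.log(t, sineLfo(t, 13, 0, 1));
}
```

With a period that long, a parameter only completes a few cycles per minute, which is why the changes read as a drifting soundscape rather than as a wobble.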

Output

The script operates in two modes: live (running indefinitely until stopped) and MIDI. The MIDI mode generates a MIDI file of a fixed length (e.g. one hour) that I can drag into Ableton, which lets me render the audio faster than real time.
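Writing a fixed-length file needs little more than a Standard MIDI File writer. The sketch below produces a minimal single-track (format 0) file: a header chunk, then one track chunk in which every event is preceded by its delta time, encoded as a variable-length quantity. It is a stand-alone illustration rather than the app’s implementation: `MidiNote`, `writeMidiFile`, the 480 ticks-per-quarter resolution, and the single channel are assumptions, and because no tempo event is written, players fall back to the format’s default of 120 BPM.

```typescript
// Minimal Standard MIDI File (format 0) writer; names and defaults are illustrative assumptions.
interface MidiNote {
  pitch: number;         // MIDI note number
  velocity: number;      // 1-127
  startTick: number;     // absolute position in ticks
  durationTicks: number;
}

const ascii = (s: string): number[] => s.split("").map((c) => c.charCodeAt(0));
const uint16 = (v: number): number[] => [(v >> 8) & 0xff, v & 0xff];
const uint32 = (v: number): number[] => [(v >>> 24) & 0xff, (v >>> 16) & 0xff, (v >>> 8) & 0xff, v & 0xff];

/** Variable-length quantity: 7 bits per byte, high bit set on every byte except the last. */
function encodeVlq(value: number): number[] {
  const bytes = [value & 0x7f];
  let rest = value >>> 7;
  while (rest > 0) {
    bytes.unshift((rest & 0x7f) | 0x80);
    rest >>>= 7;
  }
  return bytes;
}

function writeMidiFile(notes: MidiNote[], ticksPerQuarter = 480, channel = 0): Uint8Array {
  // Expand each note into absolute-time note-on and note-off events.
  const events: { tick: number; bytes: number[] }[] = [];
  for (const n of notes) {
    events.push({ tick: n.startTick, bytes: [0x90 | channel, n.pitch, n.velocity] });
    events.push({ tick: n.startTick + n.durationTicks, bytes: [0x80 | channel, n.pitch, 0] });
  }
  // Sort by time; at the same tick, note-offs (0x8n) come before note-ons (0x9n).
  events.sort((a, b) => a.tick - b.tick || a.bytes[0] - b.bytes[0]);

  // Track data: each event is preceded by the delta time since the previous event.
  const track: number[] = [];
  let lastTick = 0;
  for (const e of events) {
    track.push(...encodeVlq(e.tick - lastTick), ...e.bytes);
    lastTick = e.tick;
  }
  track.push(0x00, 0xff, 0x2f, 0x00); // End of Track meta event

  // Header: chunk length 6, format 0, one track, ticks per quarter note.
  const header = [...ascii("MThd"), ...uint32(6), ...uint16(0), ...uint16(1), ...uint16(ticksPerQuarter)];
  const trackChunk = [...ascii("MTrk"), ...uint32(track.length), ...track];
  return new Uint8Array([...header, ...trackChunk]);
}

// One quarter-note middle C: 14-byte header + 8-byte track chunk header + 13 bytes of track data.
const bytes = writeMidiFile([{ pitch: 60, velocity: 100, startTick: 0, durationTicks: 480 }]);
console.log(bytes.length); // 35
```

In Node.js, `fs.writeFileSync("output.mid", bytes)` saves the result. At the default 120 BPM and 480 ticks per quarter note, one hour corresponds to 3,456,000 ticks.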